BERKELEY, CA - In the past year, lockdowns and other COVID-19 safety measures have made online shopping more popular than ever, but the skyrocketing demand is leaving many retailers struggling to fulfill orders while ensuring the safety of their warehouse employees.

Researchers at the

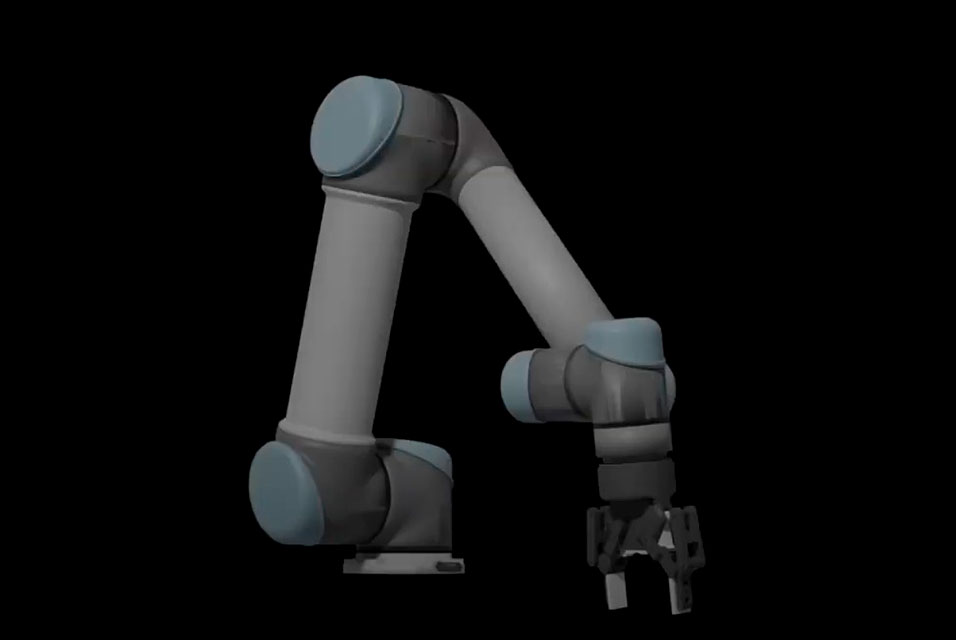

University of California, Berkeley, have created new artificial intelligence software that gives robots the speed and skill to grasp and smoothly move objects, making it feasible for them to soon assist humans in warehouse environments. The technology is described in a paper published online in the journal Science Robotics.

Automating warehouse tasks can be challenging because many actions that come naturally to humans — like deciding where and how to pick up different types of objects and then coordinating the shoulder, arm and wrist movements needed to move each object from one location to another — are actually quite difficult for robots. Robotic motion also tends to be jerky, which can increase the risk of damaging both the products and the robots.

“Warehouses are still operated primarily by humans, because it’s still very hard for robots to reliably grasp many different objects,” said Ken Goldberg, William S. Floyd Jr. Distinguished Chair in Engineering at UC Berkeley and senior author of the study. “In an automobile assembly line, the same motion is repeated over and over again, so that it can be automated. But in a warehouse, every order is different.”

In earlier work, Goldberg and UC Berkeley postdoctoral researcher Jeffrey Ichnowski created a Grasp-Optimized Motion Planner that could compute both how a robot should pick up an object and how it should move to transfer the object from one location to another.

However, the motions generated by this planner were jerky. The software's parameters could be tweaked to produce smoother motions, but computing those smoother motions took an average of about half a minute.
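One common way to put a number on how "jerky" a motion is uses jerk, the rate of change of acceleration (the third time derivative of position). The sketch below is illustrative only. It assumes jerk as the smoothness measure and compares a move that starts and stops abruptly with the textbook minimum-jerk curve. Neither profile is the output of the Berkeley planner, and the function names are hypothetical.

```python
"""Quantifying "jerky" motion with finite differences.

Assumption: jerk (third time derivative of position) stands in here as an
illustrative smoothness measure; the quintic curve is the textbook
minimum-jerk profile, not the output of the Berkeley planner.
"""


def peak_jerk(positions, dt):
    # Third forward difference approximates d^3x/dt^3 between samples.
    jerks = [
        (positions[i + 3] - 3 * positions[i + 2] + 3 * positions[i + 1] - positions[i]) / dt**3
        for i in range(len(positions) - 3)
    ]
    return max(abs(j) for j in jerks)


def abrupt_profile(n, pad=5):
    # Rest, then constant velocity from 0 to 1, then rest: the velocity
    # jumps instantly at both ends of the move.
    move = [i / (n - 1) for i in range(n)]
    return [0.0] * pad + move + [1.0] * pad


def min_jerk_profile(n, pad=5):
    # x(s) = 10 s^3 - 15 s^4 + 6 s^5 for s in [0, 1]: zero velocity and
    # zero acceleration at both ends of the move.
    move = []
    for i in range(n):
        s = i / (n - 1)
        move.append(10 * s**3 - 15 * s**4 + 6 * s**5)
    return [0.0] * pad + move + [1.0] * pad


if __name__ == "__main__":
    n, dt = 101, 0.01  # 101 samples spanning a 1-second move
    print("abrupt peak jerk:", peak_jerk(abrupt_profile(n), dt))
    print("min-jerk peak jerk:", peak_jerk(min_jerk_profile(n), dt))
```

With these assumed settings, the abrupt profile's peak jerk comes out orders of magnitude larger than that of the minimum-jerk curve. It also keeps growing as the sampling interval shrinks, which reflects the instantaneous change in velocity.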

In the new study, Goldberg and Ichnowski, in collaboration with UC Berkeley graduate student Yahav Avigal and undergraduate student Vishal Satish, dramatically sped up the computing time of the motion planner by integrating a deep learning neural network.

Neural networks allow a robot to learn from examples and then, often, to generalize to similar objects and motions.

However, the motions a neural network produces are approximations, and they aren’t always accurate enough. Goldberg and Ichnowski found that the approximation generated by the neural network could then be refined using the motion planner.

“The neural network takes only a few milliseconds to compute an approximate motion. It’s very fast, but it’s inaccurate,” Ichnowski said. “However, if we then feed that approximation into the motion planner, the motion planner only needs a few iterations to compute the final motion.”
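Ichnowski is describing what is often called warm-starting: an iterative optimizer is started from a good initial guess instead of from scratch. The toy sketch below is not the team's planner. It assumes a 1-D path of waypoints that is smoothed by plain gradient descent. The hypothetical `cheap_guess` function stands in for the network's fast but inaccurate prediction, and all other names and parameters (`lam`, `tol`) are also assumptions made for illustration.

```python
"""Toy sketch of warm-starting an iterative motion optimizer.

Assumptions (not from the study): the "motion" is a 1-D path of waypoints,
the "planner" is plain gradient descent on a smoothing cost, and
`cheap_guess` is a hypothetical stand-in for a neural network's output.
"""


def second_diff(x):
    # Discrete acceleration at each interior waypoint.
    return [x[i - 1] - 2 * x[i] + x[i + 1] for i in range(1, len(x) - 1)]


def gradient(x, ref, lam):
    # Cost J(x) = 0.5*||x - ref||^2 + 0.5*lam*||D2 x||^2, with D2 the
    # second-difference operator; gradient = (x - ref) + lam * D2^T D2 x.
    g = [xi - ri for xi, ri in zip(x, ref)]
    for k, a in enumerate(second_diff(x)):
        i = k + 1  # second_diff(x)[k] is centered on waypoint i
        g[i - 1] += lam * a
        g[i] -= 2 * lam * a
        g[i + 1] += lam * a
    return g


def optimize(x0, ref, lam=1.0, tol=1e-6, max_iters=10_000):
    # Gradient descent with fixed step 1/L; L = 1 + 16*lam bounds the
    # largest curvature of J because ||D2||^2 <= 16.
    step = 1.0 / (1.0 + 16.0 * lam)
    x = list(x0)
    for it in range(max_iters):
        g = gradient(x, ref, lam)
        if max(abs(gi) for gi in g) < tol:
            return x, it  # converged after `it` updates
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x, max_iters


def jerky_reference(n=20):
    # Straight 0 -> 1 path with an alternating +/-0.3 wobble.
    return [i / (n - 1) + 0.3 * (-1) ** i for i in range(n)]


def cheap_guess(ref):
    # Hypothetical "network output": a one-pass 3-point weighted average
    # that cancels the alternating wobble at interior waypoints.
    guess = list(ref)
    for i in range(1, len(ref) - 1):
        guess[i] = (ref[i - 1] + 2 * ref[i] + ref[i + 1]) / 4
    return guess


if __name__ == "__main__":
    ref = jerky_reference()
    _, cold_iters = optimize([0.0] * len(ref), ref)
    _, warm_iters = optimize(cheap_guess(ref), ref)
    print(f"cold start: {cold_iters} iterations")
    print(f"warm start: {warm_iters} iterations")
```

On this toy problem, both starts converge to the same smoothed path. The warm start needs fewer iterations because the guess already gets the overall shape of the path nearly right, and the overall shape is the part that plain gradient descent corrects most slowly. The exact counts depend on the assumed `lam` and `tol`.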

By combining the neural network with the motion planner, the team cut average computation time from 29 seconds to 80 milliseconds, or less than one-tenth of a second.

Goldberg predicts that, with this and other advances in robotic technology, robots could be assisting in warehouse environments in the next few years.

“Shopping for groceries, pharmaceuticals, clothing and many other things has changed as a result of COVID-19, and people are probably going to continue shopping this way even after the pandemic is over,” Goldberg said. “This is an exciting new opportunity for robots to support human workers.”

This work was supported, in part, by the National Science Foundation’s National Robotics Initiative Award #1734633: Scalable Collaborative Human-Robot Learning (SCHooL) and by donations from Google and the Toyota Research Institute Inc.